Abstract

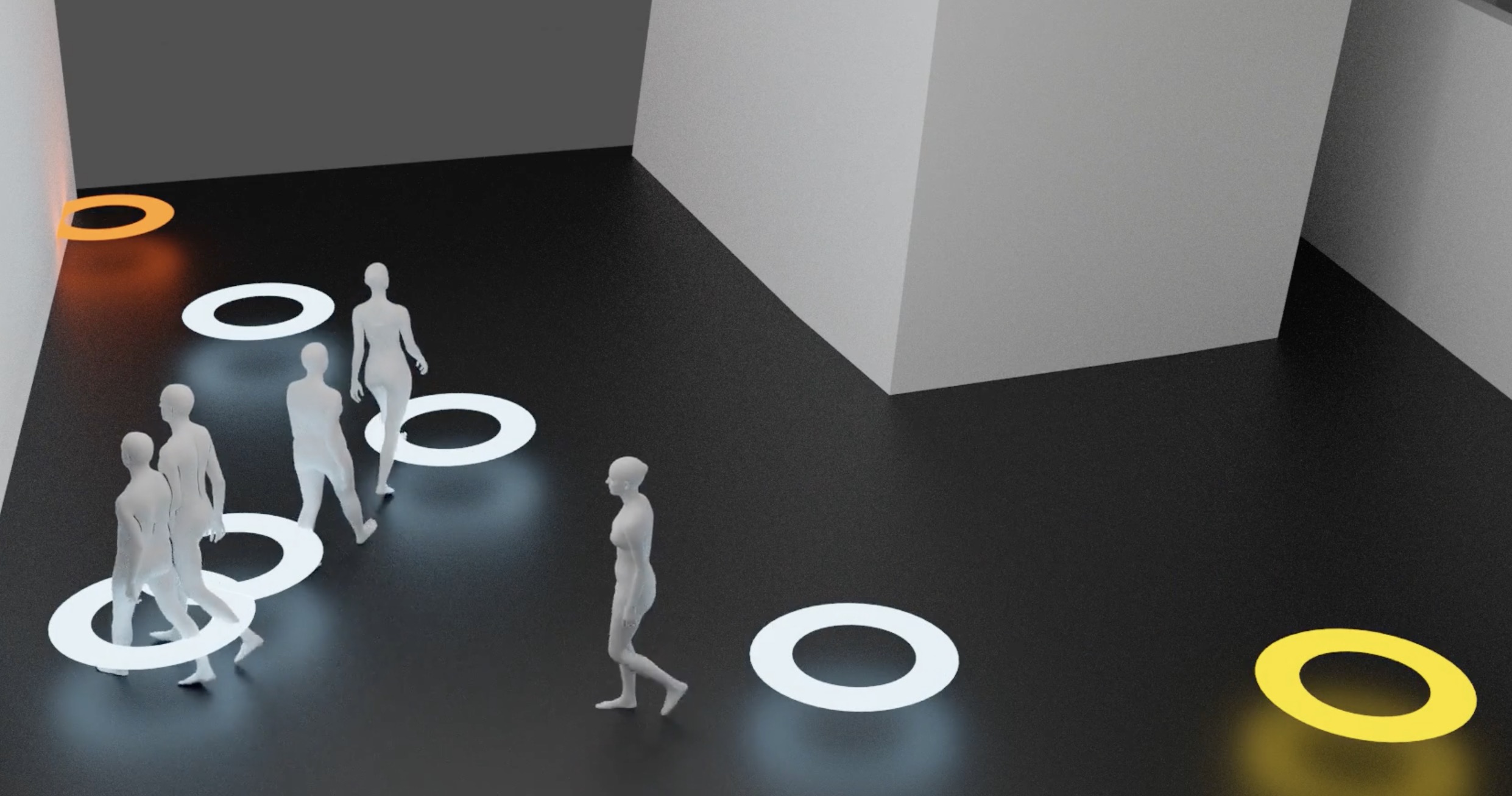

Our goal is to populate digital environments with digital humans that have diverse body shapes, move perpetually, and make plausible body-scene contact. The core challenge is to generate realistic, controllable, and infinitely long motions for diverse 3D bodies. To this end, we propose generative motion primitives via body surface markers, abbreviated as GAMMA. In our solution, we decompose long-term motion into a time sequence of motion primitives. We use body surface markers and a conditional variational autoencoder to model each motion primitive, and generate long-term motion by applying the generative model recursively. To control the motion to reach a goal, we apply a policy network to explore the model's latent space, and use a tree-based search to preserve motion quality at test time. Experiments show that our method produces more realistic and controllable motion than state-of-the-art data-driven methods. Combined with conventional path-finding algorithms, the generated human bodies can move realistically in the scene over long distances and for a long time.
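
To make the recursive structure concrete, the following is a minimal sketch of the rollout loop, not the actual GAMMA implementation. Everything in it is an illustrative assumption: `toy_decoder` stands in for a trained conditional variational autoencoder decoder, `toy_policy` stands in for the policy network, the constants `N_MARKERS`, `PRIM_LEN`, and `LATENT_DIM` are toy values, and a greedy best-of-N selection replaces the tree-based search described above.

```python
import numpy as np

# All names and values below are hypothetical, chosen only for illustration.
N_MARKERS = 4    # toy number of body surface markers
PRIM_LEN = 10    # frames per motion primitive
LATENT_DIM = 3   # latent dimension; equal to 3 so z can act as a displacement


def toy_decoder(seed_frame, z):
    """Stand-in for a trained CVAE decoder (assumption).

    Maps a seed frame of shape (N_MARKERS, 3) and a latent z of shape
    (LATENT_DIM,) to a primitive of shape (PRIM_LEN, N_MARKERS, 3).
    """
    step = 0.05 * np.tanh(z)  # bounded per-frame displacement
    offsets = np.arange(1, PRIM_LEN + 1)[:, None, None] * step[None, None, :]
    return seed_frame[None] + offsets


def toy_policy(seed_frame, goal, rng, noise=0.5):
    """Stand-in for the policy network (assumption).

    Samples a latent vector biased toward the goal location.
    """
    direction = goal - seed_frame.mean(axis=0)
    return direction + noise * rng.standard_normal(LATENT_DIM)


def rollout(start_frame, goal, n_primitives, n_candidates=8, seed=0):
    """Generate long-term motion by applying the generator recursively.

    At each step, several candidate primitives are sampled and the one
    whose final frame is closest to the goal is kept. This greedy
    selection is a simplification of a tree-based search.
    """
    rng = np.random.default_rng(seed)
    frame = start_frame
    motion = []
    for _ in range(n_primitives):
        candidates = [
            toy_decoder(frame, toy_policy(frame, goal, rng))
            for _ in range(n_candidates)
        ]
        best = min(
            candidates,
            key=lambda p: np.linalg.norm(p[-1].mean(axis=0) - goal),
        )
        motion.append(best)
        frame = best[-1]  # last frame seeds the next primitive
    return np.concatenate(motion, axis=0)
```

Calling `rollout(np.zeros((N_MARKERS, 3)), np.array([1.0, 0.0, 0.0]), n_primitives=5)` returns an array of shape `(5 * PRIM_LEN, N_MARKERS, 3)`. Because each primitive is seeded by the final frame of the previous one, the sequence can be extended to any length by increasing `n_primitives`.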