Basic Information

I am a PhD student at ETH Zurich, co-advised by Prof. Siyu Tang and Prof. Andreas Geiger. My research is in computer vision, specifically building controllable neural implicit representations for clothed human bodies. I am also interested in differentiable combinatorial optimization and its applications in computer vision. Before I came to ETH, I worked as a researcher in the Kording Lab at the University of Pennsylvania. Before that, I worked as a senior system engineer in the autonomous driving group at Baidu. I received my Master's degree from the University of California, Irvine, under the supervision of Prof. Charless Fowlkes.

Publications

Authors: Shaofei Wang, Tomas Simon, Igor Santesteban, Timur Bagautdinov, Junxuan Li, Vasu Agrawal, Fabian Prada, Shoou-I Yu, Pace Nalbone, Matt Gramlich, Roman Lubachersky, Chenglei Wu, Javier Romero, Jason Saragih, Michael Zollhoefer, Andreas Geiger, Siyu Tang, Shunsuke Saito

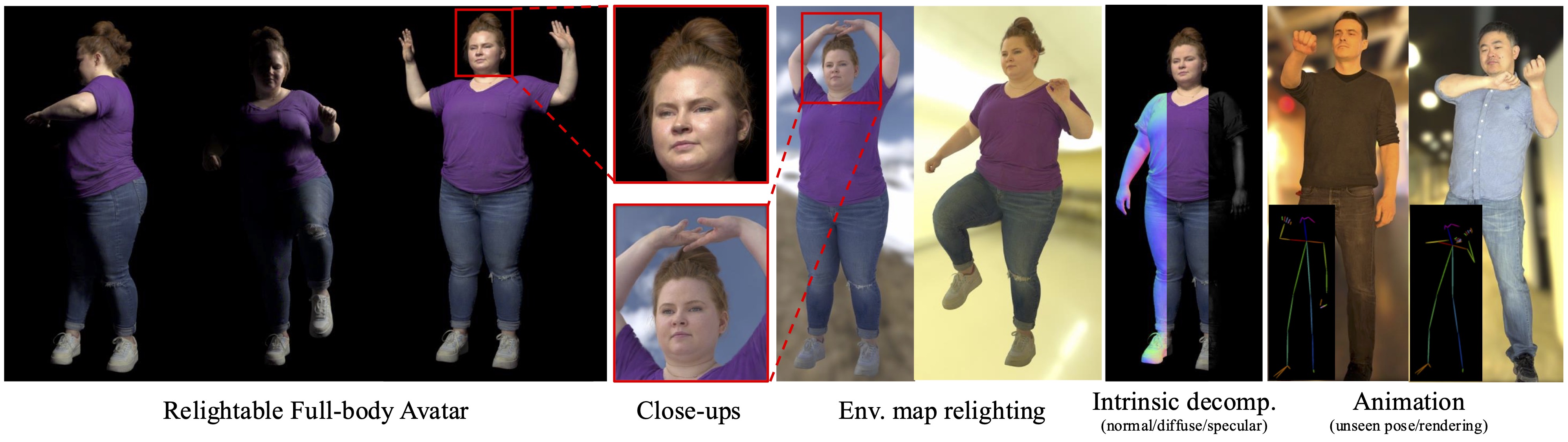

RFGCA learns high-fidelity relightable and drivable full-body avatars from light stage captures.

Authors: Deheng Zhang*, Jingyu Wang*, Shaofei Wang, Marko Mihajlovic, Sergey Prokudin, Hendrik P.A. Lensch, Siyu Tang (*equal contribution)

We present RISE-SDF, a method for reconstructing the geometry and material of glossy objects while achieving high-quality relighting.

Authors: Shaofei Wang, Božidar Antić, Andreas Geiger, Siyu Tang

IntrinsicAvatar learns relightable and animatable avatars from monocular videos, without any data-driven priors.

Authors: Xiyi Chen, Marko Mihajlovic, Shaofei Wang, Sergey Prokudin, Siyu Tang

We introduce a morphable diffusion model to enable consistent, controllable novel view synthesis of humans from a single image. Given a single input image and a morphable mesh with a desired facial expression, our method directly generates 3D-consistent and photo-realistic images from novel viewpoints, which can then be used to reconstruct a coarse 3D model with off-the-shelf neural surface reconstruction methods such as NeuS2.

Authors: Zhiyin Qian, Shaofei Wang, Marko Mihajlovic, Andreas Geiger, Siyu Tang

Given a monocular video, 3DGS-Avatar learns clothed human avatars that model pose-dependent appearance and generalize to out-of-distribution poses, with short training times and interactive rendering frame rates.

Authors: Kaifeng Zhao, Yan Zhang, Shaofei Wang, Thabo Beeler, Siyu Tang

Interacting with environments is a core ability of virtual humans and remains a challenging problem. We propose a method capable of generating a sequence of natural interaction events in real cluttered scenes.

Authors: Shaofei Wang, Katja Schwarz, Andreas Geiger, Siyu Tang

Given sparse multi-view videos, ARAH learns animatable clothed human avatars that have detailed pose-dependent geometry/appearance and generalize to out-of-distribution poses.

Authors: Kaifeng Zhao, Shaofei Wang, Yan Zhang, Thabo Beeler, Siyu Tang

Synthesizing natural interactions between virtual humans and their 3D environments is critical for numerous applications, such as computer games and AR/VR experiences. We propose COINS, for COmpositional INteraction Synthesis with Semantic Control.

Authors: Shaofei Wang, Marko Mihajlovic, Qianli Ma, Andreas Geiger, Siyu Tang

MetaAvatar is a meta-learned model that represents generalizable and controllable neural signed distance fields (SDFs) for clothed humans. It can be quickly fine-tuned to represent unseen subjects given as few as 8 monocular depth images.

Authors: Shaofei Wang, Andreas Geiger, Siyu Tang

Registering point clouds of dressed humans to parametric human models is a challenging task in computer vision. We propose novel piecewise transformation fields (PTF), a set of functions that learn 3D translation vectors and facilitate occupancy learning, joint-rotation estimation, and mesh registration.